Fifteen Years Where Latency Is the Product

What network reliability, voice infrastructure, and edge telemetry taught me about building systems

I never set out to become a real-time systems engineer. But looking back at fifteen years of building infrastructure, I can see that every role pushed me deeper into the spaces between packets, into the milliseconds of latency that separate systems that work from systems that fail.

Learning What Reliability Actually Means

My education in real-time systems began at Comcast. As a Senior Reliability Engineer, I was responsible for keeping Xfinity Business Internet running and ensuring NBC Sports stayed stable during national broadcasts. The stakes were immediate. When millions of viewers tune in for Sunday Night Football, there is no buffer, no retry, no “we’ll fix it in the next sprint.” The system works or it doesn’t, and everyone knows which one happened.

I built event-driven monitoring and auto-remediation systems using Kafka, RabbitMQ, and Redis. We cut Xfinity Business Internet downtime by half. I drove enterprise-wide Splunk adoption, wiring automated playbooks that slashed incident detection and resolution time. But the real lesson wasn’t technical. It was understanding that observability isn’t a feature you bolt on later. It’s the foundation everything else depends on.

That lesson would follow me everywhere.

The Unforgiving World of Voice

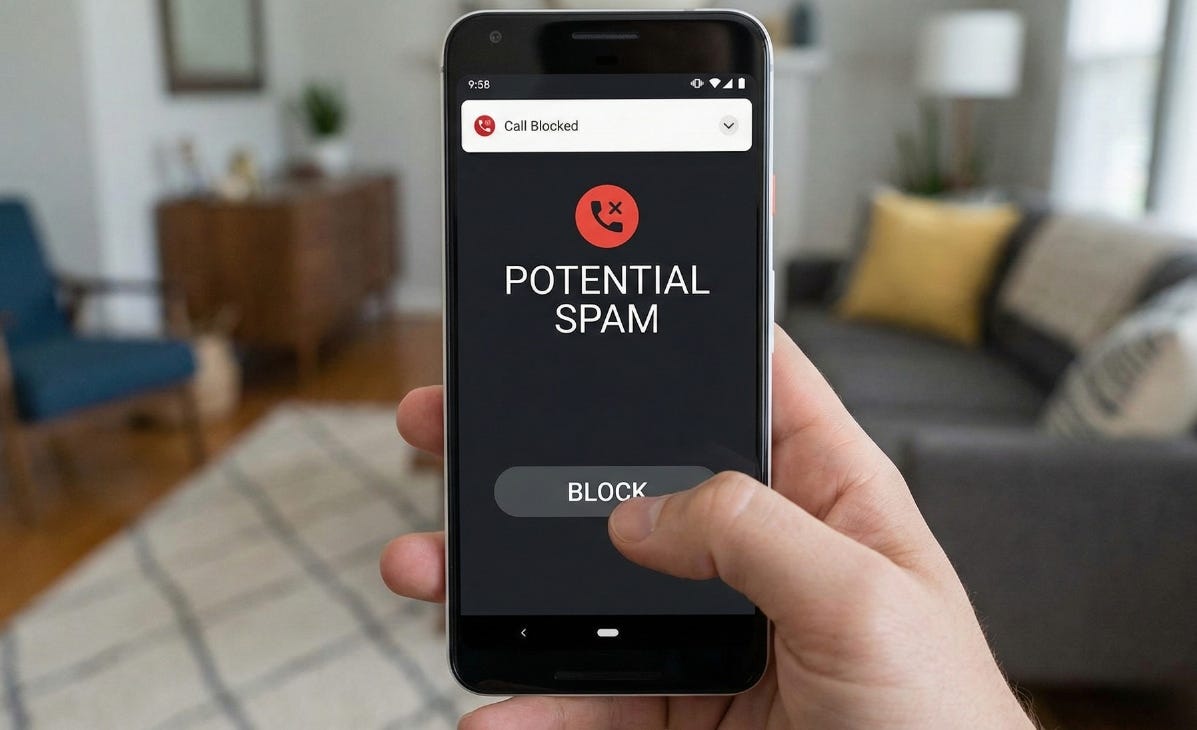

From Comcast I moved to TelTech, the company behind Robokiller. If you’ve ever wondered why voice infrastructure is considered hard, spend a day debugging complex SIP routing issues while your carriers point fingers at each other.

Voice is different from other backend work. You can buffer video. You can retry HTTP requests. But voice is now or never. Every millisecond of latency adds up to awkward, stilted conversation. One-sided audio, codec mismatches, and even modest jitter destroy comprehension. Packet loss that would be invisible in a web app becomes a dropped syllable that changes the meaning of a sentence.
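Jitter has a precise definition in RTP. RFC 3550 specifies a running interarrival jitter estimate, the value receivers report back in RTCP: compare each packet's transit time with the previous packet's, then smooth the absolute difference with a gain of 1/16. The sketch below is a minimal Python rendering of that estimator, assuming RTP timestamps and arrival times are already expressed in the same clock units (8000 per second for narrowband audio). The class and method names are illustrative, not taken from any particular library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class JitterEstimator:
    """Running interarrival jitter estimate, following RFC 3550 section 6.4.1.

    Assumes RTP timestamps and arrival times share one clock unit
    (for example 8000 units per second for 8 kHz audio).
    """

    jitter: float = 0.0
    prev_transit: Optional[int] = None

    def update(self, rtp_timestamp: int, arrival: int) -> float:
        # Relative transit time; any constant clock offset cancels out below.
        transit = arrival - rtp_timestamp
        if self.prev_transit is not None:
            # D(i-1, i): change in transit time between consecutive packets.
            d = abs(transit - self.prev_transit)
            # J(i) = J(i-1) + (|D(i-1, i)| - J(i-1)) / 16
            self.jitter += (d - self.jitter) / 16
        self.prev_transit = transit
        return self.jitter
```

With packets sent every 160 timestamp units (20 ms at 8 kHz), a stream with constant delay reports zero jitter, while a single packet arriving 40 units (5 ms) late moves the estimate to 2.5 units. The 1/16 gain smooths isolated spikes, so the number tracks sustained variation rather than any one late packet.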

At TelTech I worked on the infrastructure that powered Robokiller’s call blocking for 12 million users. The system processed over 10 million minutes monthly, analyzing calls in real time to determine whether they were spam. Sub-second response times weren’t a nice-to-have. They were the product.

I learned SIP, RTP, and the weird edge cases that come with bridging VoIP to the legacy telephone network. Carriers use different codecs, strange signaling headers, and inconsistent DTMF handling. The modern VoIP world and the old PSTN world don’t speak the same language, and someone has to translate.
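DTMF is a good example of the translation problem. A keypress can arrive as audio tones inside the media stream, as a SIP INFO message, or as RTP "telephone-event" packets defined in RFC 4733, and a gateway has to cope with whichever form each side uses. As a minimal sketch of the third case, the Python below decodes the four-byte RFC 4733 payload. The function and type names are illustrative, and a real gateway would also need to deduplicate the repeated end-of-event packets and handle redundant events carried in the same packet.

```python
import struct
from typing import NamedTuple

# RFC 4733 event codes 0-15 map to the DTMF keypad symbols in this order.
DTMF_SYMBOLS = "0123456789*#ABCD"


class TelephoneEvent(NamedTuple):
    event: int     # event code; 0-15 are DTMF digits
    end: bool      # E bit: marks the final packet(s) of an event
    volume: int    # tone power in dBm0 with the sign dropped (0-63)
    duration: int  # event duration so far, in RTP timestamp units


def parse_telephone_event(payload: bytes) -> TelephoneEvent:
    """Decode the first 4-byte RFC 4733 telephone-event block of an RTP payload."""
    if len(payload) < 4:
        raise ValueError("telephone-event payload must be at least 4 bytes")
    # Network byte order: 8-bit event, 8 bits of E/R/volume, 16-bit duration.
    event, flags, duration = struct.unpack("!BBH", payload[:4])
    return TelephoneEvent(
        event=event,
        end=bool(flags & 0x80),  # top bit is E; the next bit (R) is reserved
        volume=flags & 0x3F,     # low six bits carry the volume
        duration=duration,
    )


def dtmf_symbol(ev: TelephoneEvent) -> str:
    """Map a DTMF event code to its keypad symbol."""
    if ev.event >= len(DTMF_SYMBOLS):
        raise ValueError(f"event {ev.event} is not a DTMF digit")
    return DTMF_SYMBOLS[ev.event]
```

For example, the payload bytes `05 8A 03 20` decode to digit 5 with the end bit set, a volume of 10, and a duration of 800 units, which is 100 ms at an 8 kHz clock.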

That someone became me when I cofounded a telephony startup as CTO. We rebuilt the voice infrastructure from scratch, creating a mobile SDK that handled SIP and RTP natively. This gave us full control over call routing regardless of which carrier or provider sat upstream. When you own the protocol layer, you’re not dependent on anyone’s roadmap. You can optimize the entire path, swap providers without rewriting the app, and debug issues end-to-end instead of opening tickets with three different vendors who all blame each other.

The technical challenge was real, but the insight was strategic: vendor flexibility isn’t just about cost savings. It’s about owning your own destiny.

Same Problems, Different Domain

After the telephony startup, I joined Take-Two Interactive to lead backend platform engineering for their mobile games division, including titles like Two Dots. Different industry, same fundamental demands.

Gaming has its own version of “now or never.” When a player taps the screen, they expect immediate feedback. When an engagement event fires, it needs to reach the analytics pipeline instantly or the data loses value. I built an event-driven engagement platform that doubled daily session frequency and drove a 20% revenue increase through improved player retention.

The work confirmed something I’d suspected: the pattern recognition transfers. Real-time voice, real-time gaming, real-time anything. The protocols differ but the physics of latency are universal. You learn to think in milliseconds, to trace packets through systems, to find the bottleneck that’s adding 50ms nobody accounted for.

Hardware Meets Software at the Edge

Then came Vapor IO, where everything got harder.

Vapor IO operates edge data centers, computing infrastructure deployed at the base of cell towers and in urban cores rather than centralized cloud regions. The pitch is simple: put compute closer to users and latency drops. The reality is complicated. Edge environments have thermal constraints, power limitations, and connectivity that makes cloud networking look trivial.

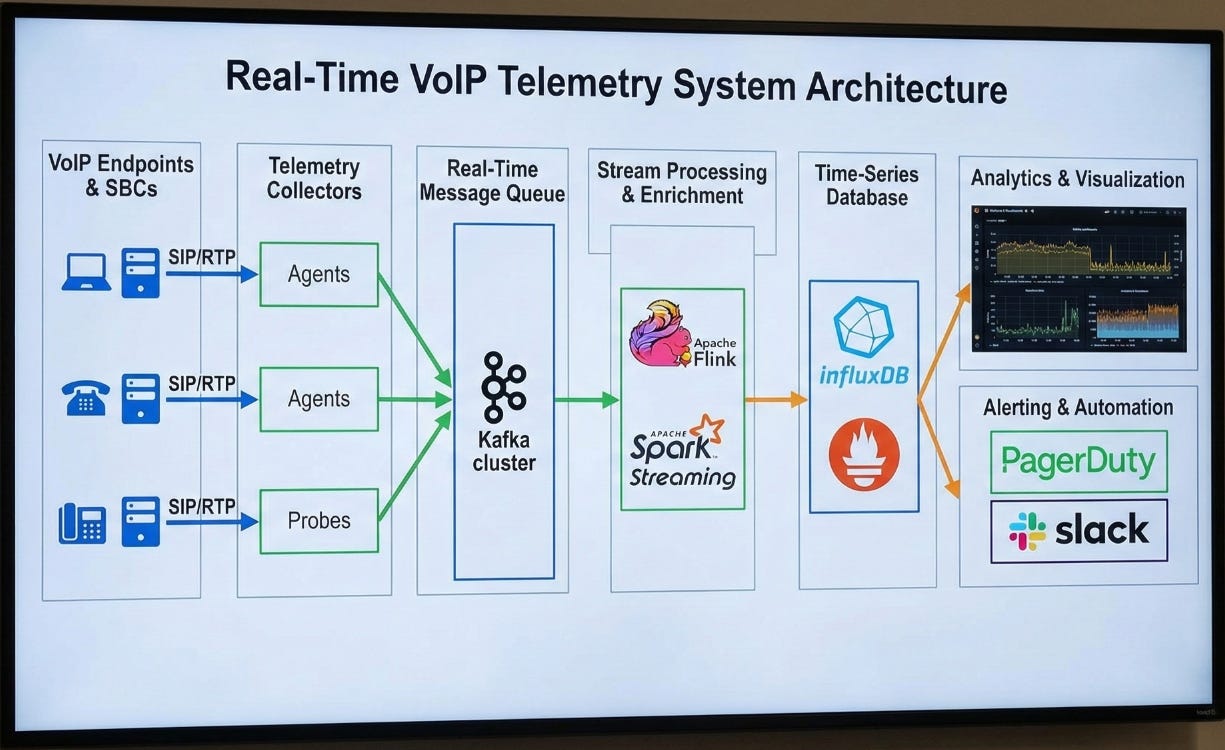

I joined as VP of Engineering and inherited Synse, a proprietary telemetry system built to monitor the physical infrastructure. Temperature sensors, power distribution units, fan controllers. Hardware that doesn’t speak HTTP or know what Kubernetes is.

Synse worked. We ran it across 30+ edge data centers, collecting telemetry from hardware that ranged from standard server racks to experimental GPU clusters. I helped build Zero Gap AI, a platform we developed with NVIDIA that runs AI workloads at the edge on GH200 Grace Hopper systems. Observability isn’t optional when you’re running $50,000 GPUs in a cell tower enclosure with constrained cooling.

But Synse was proprietary. It solved our problems but created its own silo. The more I worked with it, the more I came to appreciate what open standards offer: interoperability, community knowledge, vendor flexibility. The same lesson I’d learned in telephony, showing up in a different form.

I started embracing OpenTelemetry, the open standard for observability data. Not because Synse was bad, but because I’d seen this pattern before. Proprietary systems create lock-in. They make you dependent on internal roadmaps. They isolate you from the broader ecosystem of tools and talent.

The shift wasn’t about abandoning what we’d built. It was about recognizing that the best infrastructure connects systems rather than creating new walls between them.

What Comes Next

Fifteen years of building real-time systems. Reliability engineering at Comcast. Voice infrastructure at TelTech and my own startup. Event systems at Take-Two. Edge telemetry at Vapor IO. Each role taught me something about latency, observability, and the value of owning your own stack while remaining compatible with everyone else’s.

After fifteen years of connecting systems that weren’t designed to talk to each other, I’ve started to see infrastructure differently. The best tools don’t create new kingdoms. They build bridges.